直截了当:展示给我提示符。 [译]

Hamel Husain

通过截获 API 调用,迅速掌握难以解读的大语言模型框架。

作者:Hamel Husain

发布日期:2024 年 2 月 14 日

背景

众多库致力于通过自动重构或创建提示符来优化大语言模型的输出。这些建库宣称能够使大语言模型的输出更加:

- 安全 (例如:安全护栏)

- 可预测 (例如:智能指导)

- 结构化 (例如:指令生成器)

- 鲁棒 (例如:语言链)

- … 或者针对特定指标进行优化 (例如:DSPy)。

这些工具中_某些_共同的思路是鼓励用户不直接与提示符交互。

DSPy:“这开启了一种新范式,语言模型及其提示符退居幕后….重新编译程序时,DSPy 会生成新的有效提示符”

智能指导 “智能指导代表了一种高效控制的编程范式,相比传统提示更为高效…”

即便有些工具不反对使用提示符,我发现要获取这些工具最终发送给语言模型的提示符很有挑战。**这些工具发送给大语言模型的提示是对其操作的自然语言描述,是最快了解它们工作方式的途径。**此外,一些工具使用复杂术语来描述其内部机制,这使得理解它们的操作更加困难。

基于我接下来将解释的原因,我认为大多数人采用以下思维方式会更好:

在本篇博客中,我会教你如何**不需深究文档或源代码,就能截获任何工具的 API 调用及其提示符。**我将通过使用 mitmproxy 的示例,展示如何设置和操作,以便捷地理解我之前提到的工具及其大语言模型的工作原理。

动机:尽可能减少非必要的复杂性

在决定采用某种抽象之前,考虑到非必要复杂性的风险是非常重要的。这一点对于大语言模型(LLM)的应用来说尤其关键,因为与编程的抽象相比,LLM 的抽象往往使用户不得不回到编码上来,而不是用自然语言与 AI 进行交流,这与 LLM 的初衷相悖:

尽管这听起来有些俏皮,但在评估工具时牢记这一点是非常有价值的。工具提供的自动化主要分为两类:

- 代码与 LLM 的结合应用: 通常情况下,通过编码来实现这类自动化是最佳选择,因为任务的执行需要运行代码。例如路由、函数执行、重试、串联等。

- 重构和编写提示: 表达意图通常最适合用自然语言,但也有例外。比如,用代码而不是自然语言来定义一个函数或架构会更加方便。

很多框架都提供了这两种自动化方式。但是,过分依赖第二种方式可能会带来不利影响。通过观察提示,你可以作出以下决定:

- 这个框架是否真的必要?

- 我是否只需复制最终的提示(一个字符串),然后抛弃这个框架?

- 我们能否编写出更好的提示(更简洁,更符合意图等)?

- 这是否是最佳方案(API 调用的数量是否合适)?

根据我的经验,观察提示和 API 调用对于做出明智的选择至关重要。

拦截大语言模型 API 调用

有很多方法可以拦截大语言模型 API 调用,比如修改源代码或寻找对用户友好的设置选项。我发现这些方法往往非常耗时,特别是当源代码和文档的质量参差不齐的时候。毕竟,我只是想看到 API 调用,而不需要深入了解代码是如何工作的!

然而,找到一个既简单又高效的方法并不容易。一个不依赖特定框架的方法是设置一个代理,用来记录你发出的 API 请求。使用mitmproxy,一个免费的开源 HTTPS 代理,可以轻松实现这一点。

配置 mitmproxy

以下是一种特别适合初学者的设置 mitmproxy 的方式,简单易懂,助你快速上手:

-

根据 mitmproxy 官网 上的指南完成安装。

-

在终端中运行

mitmweb命令启动交互式用户界面。请留意日志中显示的用户界面 URL,通常格式为:Web server listening at http://127.0.0.1:8081/。 -

下一步,您需要设置您的设备(比如笔记本电脑),使所有网络流量都通过

mitproxy进行路由,该服务监听在http://localhost:8080。根据官方文档建议:我们推荐您上网搜索如何为您的操作系统配置 HTTP 代理。有些操作系统提供全局设置选项,有些浏览器则有自己的配置方式,还有一些应用可能需要设置环境变量等。

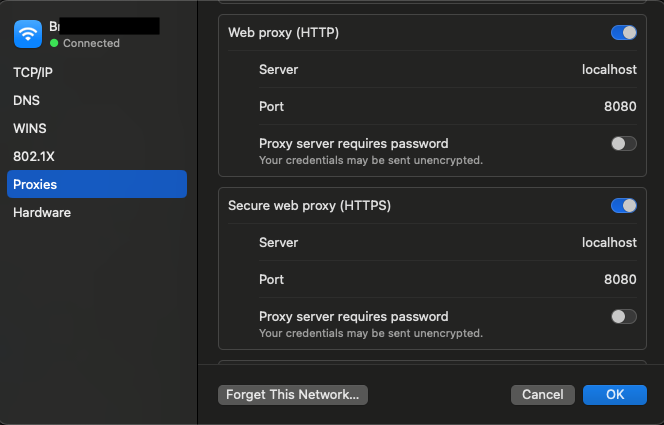

以我的经验为例,我通过谷歌搜索“为 macOS 设置代理”,找到了如下操作方法:

选择 Apple 菜单 > 系统设置,点击侧栏中的网络,选择右侧的一个网络服务,点击“详情”,然后选择“代理”。

通过上述步骤,即使是初学者也能轻松设置并开始使用 mitmproxy。这种方法不仅简化了安装和配置过程,还为用户提供了一种直观的方式来监控和管理网络流量。

我然后在界面的特定位置添加了localhost 和 8080:

-

然后,访问 http://mitm.it,网站将指导你如何安装用于拦截 HTTPS 请求的 mitmproxy 证书授权机构(CA)。如果愿意,你也可以手动完成这一步骤,操作指南请参见此处。请记住 CA 文件的存储位置,我们稍后会用到它。

-

通过访问如 https://mitmproxy.org/ 等网站,你可以测试一切是否正常工作。你应该能在 mtimweb 用户界面中看到相应的输出,对我来说,它的位置是 http://127.0.0.1:8081/(查看终端日志以获取 URL)。

-

完成以上设置后,你可以关闭之前在网络上启用的代理。我是通过切换之前展示的屏幕截图中的代理按钮在我的 Mac 上做到这一点的。这样做是为了限制代理仅适用于 Python 程序,以避免不必要的干扰。

提示:通常,与网络相关的软件会允许你通过设置环境变量来代理外出请求。这就是我们将采取的方法,专门将代理应用于特定的 Python 程序。不过,我还是鼓励你在熟悉操作后,试试看能否在其他类型的程序中找到新的发现!

Python 的环境变量

为了让 requests 和 httpx 库能够通过代理转发流量并为 HTTPS 流量引用 CA 文件,我们需要设置以下环境变量:

重要提示:请确保在执行本博客帖子中任何代码片段之前,先设置好这些环境变量。

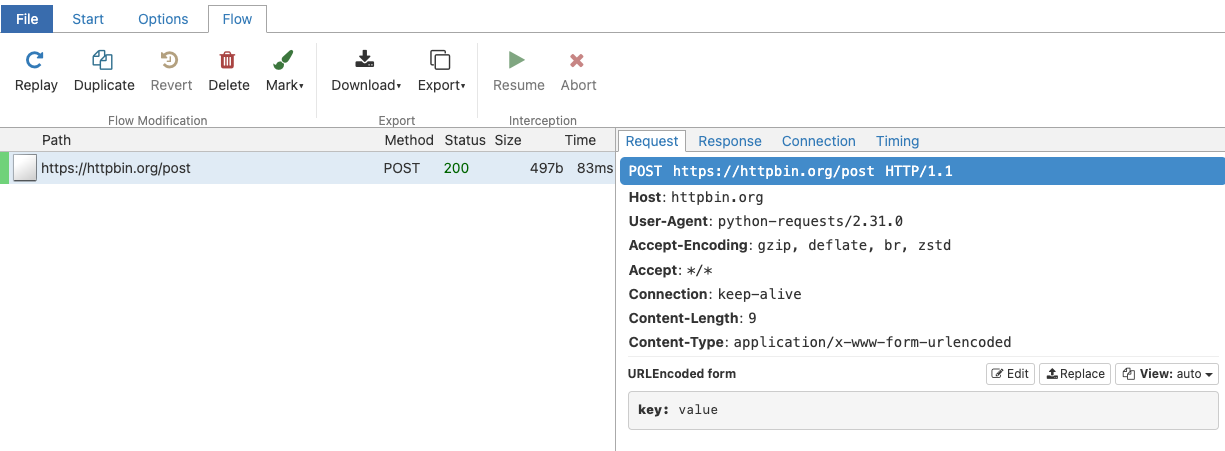

你可以通过执行以下简短的代码来进行基础测试:

这在用户界面中的显示效果如下:

示例

接下来是最有趣的部分,我们将通过运行一些大语言模型库的示例,并截获它们的 API 调用!

安全防护

安全防护功能让你能够定义特定的结构和类型,并利用这些定义来校验和修正大语言模型(LLM)生成的输出。以下是来自 guardrails-ai/guardrails README 的一个入门示例:

from pydantic import BaseModel, Fieldfrom guardrails import Guardimport openaiclass Pet(BaseModel):pet_type: str = Field(description="Species of pet")name: str = Field(description="a unique pet name")prompt = """What kind of pet should I get and what should I name it?${gr.complete_json_suffix_v2}"""guard = Guard.from_pydantic(output_class=Pet, prompt=prompt)validated_output, *rest = guard(llm_api=openai.completions.create,engine="gpt-3.5-turbo-instruct")print(f"{validated_output}")

{"pet_type": "dog","name": "Buddy

这个过程是怎样进行的?结构化输出和验证又是如何实现的呢?通过查看 mitmproxy 的用户界面,我发现上面的代码触发了两次大语言模型 API 的调用,第一次调用用到了这样一个提示:

What kind of pet should I get and what should I name it?Given below is XML that describes the information to extract from this document and the tags to extract it into.<output><string name="pet_type" description="Species of pet"/><string name="name" description="a unique pet name"/></output>ONLY return a valid JSON object (no other text is necessary), where the key of the field in JSON is the `name` attribute of the corresponding XML, and the value is of the type specified by the corresponding XML's tag. The JSON MUST conform to the XML format, including any types and format requests e.g. requests for lists, objects and specific types. Be correct and concise.Here are examples of simple (XML, JSON) pairs that show the expected behavior:- `<string name='foo' format='two-words lower-case' />` => `{'foo': 'example one'}`- `<list name='bar'><string format='upper-case' /></list>` => `{"bar": ['STRING ONE', 'STRING TWO', etc.]}`- `<object name='baz'><string name="foo" format="capitalize two-words" /><integer name="index" format="1-indexed" /></object>` => `{'baz': {'foo': 'Some String', 'index': 1}}`

紧接着,进行了第二次调用,使用了这样一个提示:

I was given the following response, which was not parseable as JSON."{\n \"pet_type\": \"dog\",\n \"name\": \"Buddy"Help me correct this by making it valid JSON.Given below is XML that describes the information to extract from this document and the tags to extract it into.<output><string name="pet_type" description="Species of pet"/><string name="name" description="a unique pet name"/></output>ONLY return a valid JSON object (no other text is necessary), where the key of the field in JSON is the `name` attribute of the corresponding XML, and the value is of the type specified by the corresponding XML's tag. The JSON MUST conform to the XML format, including any types and format requests e.g. requests for lists, objects and specific types. Be correct and concise. If you are unsure anywhere, enter `null`.

确实,要实现结构化输出,过程看起来颇为繁复!我们从中了解到,这个库通过使用 XML 架构来处理结构化输出,而其他方法可能采用函数调用。如果你能在揭示这一“魔法”之后,想出一个更简单或更有效的方案,那将是值得考虑的。无论如何,我们现在对其工作机制有了更深的理解,而且避免了让你陷入不必要的复杂性中,这本身就是一种进步。

指南

指南功能为编写提示语提供了限制性的生成选项和编程结构。让我们通过他们教程中的一个聊天示例深入探讨一番:

import guidancegpt35 = guidance.models.OpenAI("gpt-3.5-turbo")import refrom guidance import gen, select, system, user, assistant@guidancedef plan_for_goal(lm, goal: str):# This is a helper function which we will use belowdef parse_best(prosandcons, options):best = re.search(r'Best=(\d+)', prosandcons)if not best:best = re.search(r'Best.*?(\d+)', 'Best= option is 3')if best:best = int(best.group(1))else:best = 0return options[best]# Some general instruction to the modelwith system():lm += "You are a helpful assistant."# Simulate a simple request from the user# Note that we switch to using 'lm2' here, because these are intermediate steps (so we don't want to overwrite the current lm object)with user():lm2 = lm + f"""\I want to {goal}Can you please generate one option for how to accomplish this?Please make the option very short, at most one line."""# Generate several options. Note that this means several sequential generation requestsn_options = 5with assistant():options = []for i in range(n_options):options.append((lm2 + gen(name='option', temperature=1.0, max_tokens=50))["option"])# Have the user request pros and conswith user():lm2 += f"""\I want to {goal}Can you please comment on the pros and cons of each of the following options, and then pick the best option?---"""for i, opt in enumerate(options):lm2 += f"Option {i}: {opt}\n"lm2 += f"""\---Please discuss each option very briefly (one line for pros, one for cons), and end by saying Best=X, where X is the number of the best option."""# Get the pros and cons from the modelwith assistant():lm2 += gen(name='prosandcons', temperature=0.0, max_tokens=600, stop="Best=") + "Best=" + gen("best", regex="[0-9]+")# The user now extracts the one selected as the best, and asks for a full plan# We switch back to 'lm' because this is the final result we wantwith user():lm += f"""\I want to {goal}Here is my plan: {options[int(lm2["best"])]}Please elaborate on this plan, and tell me how to best accomplish it."""# The plan is generatedwith assistant():lm += gen(name='plan', max_tokens=500)return lm

results = gpt35 + plan_for_goal(goal="read more books")

systemYou are a helpful assistant.userI want to read more booksHere is my plan: Set aside 30 minutes of dedicated reading time each day.Please elaborate on this plan, and tell me how to best accomplish it.assistantSetting aside 30 minutes of dedicated reading time each day is a great plan to read more books. Here are some tips to help you accomplish this goal:1. Establish a routine: Choose a specific time of day that works best for you, whether it's in the morning, during lunch break, or before bed. Consistency is key to forming a habit.2. Create a reading-friendly environment: Find a quiet and comfortable spot where you can focus on your reading without distractions. It could be a cozy corner in your home, a park bench, or a local library.3. Minimize distractions: Put away your phone, turn off the TV, and avoid any other potential interruptions during your dedicated reading time. This will help you stay focused and fully immerse yourself in the book.4. Choose books that interest you: Select books that align with your personal interests, hobbies, or goals. When you're genuinely interested in the subject matter, you'll be more motivated to read regularly.5. Start with manageable goals: If you're new to reading or have a busy schedule, start with a smaller time commitment, such as 15 minutes, and gradually increase it to 30 minutes or more as you become more comfortable.6. Set a timer: Use a timer or a reading app that allows you to track your reading time. This will help you stay accountable and ensure that you dedicate the full 30 minutes to reading.7. Make reading enjoyable: Create a cozy reading atmosphere by lighting a candle, sipping a cup of tea, or playing soft background music. Engaging all your senses can enhance your reading experience.8. Join a book club or reading group: Consider joining a book club or participating in a reading group to connect with fellow book lovers. This can provide additional motivation, discussion opportunities, and book recommendations.9. Keep a reading log: Maintain a record of the books you've read, along with your thoughts and reflections. This can help you track your progress, discover patterns in your reading preferences, and serve as a source of inspiration for future reading.10. Be flexible: While it's important to have a dedicated reading time, be flexible and adaptable. Life can sometimes get busy, so if you miss a day, don't be discouraged. Simply pick up where you left off and continue with your reading routine.Remember, the goal is to enjoy the process of reading and make it a regular part of your life. Happy reading!

看上去真的很不错!但具体是怎么回事呢?这实际上包括了对 OpenAI 的 7 次调用,我把它们整理在了这个 gist 里。这其中有 5 次是大语言模型进行“内部思维”,以产生创意的 API 调用。尽管温度被设置为 1.0,但这些“创意”大部分是重复的。OpenAI 的倒数第二次调用整理出了这些“创意”,如下所示:

I want to read more booksCan you please comment on the pros and cons of each of the following options, and then pick the best option?---Option 0: Set aside dedicated time each day for reading.Option 1: Set aside 30 minutes of dedicated reading time each day.Option 2: Set aside dedicated time each day for reading.Option 3: Set aside dedicated time each day for reading.Option 4: Join a book club.---Please discuss each option very briefly (one line for pros, one for cons), and end by saying Best=X, where X is the number of the best option.

根据我的经验,如果你让语言模型一次性生成创意,效果可能会更好。这样,大语言模型能够参照之前的创意,从而达到更多的多样性。这是一个关于无意中增加复杂性的典型例子:盲目地应用这种设计模式很容易。虽然这不是对这一特定框架的批判——代码已经明确说明了会进行 5 次独立的调用。不过,检查你的工作通过审查 API 调用总是一个好主意。

Langchain

Langchain 是一个针对所有大语言模型相关任务的多功能工具。许多人在开始使用大语言模型时都会选择依赖 Langchain。鉴于 Langchain 涵盖了广泛的功能,我在这里选取两个示例进行说明。

LCEL 批量处理解析

首先,我们一起探索他们最新的 LCEL(langchain expression language)指南提供的这个例子:

from langchain_openai import ChatOpenAIfrom langchain_core.prompts import ChatPromptTemplatefrom langchain_core.output_parsers import StrOutputParserfrom langchain_core.runnables import RunnablePassthroughprompt = ChatPromptTemplate.from_template("Tell me a short joke about {topic}")output_parser = StrOutputParser()model = ChatOpenAI(model="gpt-3.5-turbo")chain = ({"topic": RunnablePassthrough()}| prompt| model| output_parser)

chain.batch(["ice cream", "spaghetti", "dumplings", "tofu", "pizza"])

["Why did the ice cream go to therapy?\n\nBecause it had too many toppings and couldn't find its flavor!",'Why did the tomato turn red?\n\nBecause it saw the spaghetti sauce!','Why did the dumpling go to the bakery?\n\nBecause it kneaded some company!','Why did the tofu go to the party?\n\nBecause it wanted to blend in with the crowd!','Why did the pizza go to the wedding?\n\nBecause it wanted to be a little cheesy!']

颇为引人注目,不是吗?这究竟是怎么回事呢?当我用 mitmproxy 工具深入观察时,发现了 五个独立的 API 调用:

{ "messages": [{"content": "Tell me a short joke about spaghetti", "role": "user"}],"model": "gpt-3.5-turbo", "n": 1, "stream": false, "temperature": 0.7}

{ "messages": [{"content": "Tell me a short joke about ice cream", "role": "user"}],"model": "gpt-3.5-turbo", "n": 1, "stream": false, "temperature": 0.7}

...针对列表中的每一项都进行了类似的调用。

向 OpenAI 发起五个独立的请求(虽然是异步的),可能并不符合你的期望,因为 OpenAI API 支持批量请求。1 我自己在使用 LCEL 时也遇到了速率限制问题——直到我仔细检查了 API 调用后,我才真正理解了问题所在!(很容易被“批量处理”这个词误导)。

智能大语言模型链

下一步,我们将探讨一个自动化工具——特别指的是 SmartLLMChain,它能够自动为你生成编写提示:

from langchain.prompts import PromptTemplatefrom langchain_experimental.smart_llm import SmartLLMChainfrom langchain_openai import ChatOpenAIhard_question = "I have a 12 liter jug and a 6 liter jug.\I want to measure 6 liters. How do I do it?"prompt = PromptTemplate.from_template(hard_question)llm = ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo")

chain = SmartLLMChain(llm=llm, prompt=prompt,n_ideas=2,verbose=True)result = chain.run({})

print(result)

Idea 1: 1. Fill the 12 liter jug completely.2. Pour the contents of the 12 liter jug into the 6 liter jug. This will leave you with 6 liters in the 12 liter jug.3. Empty the 6 liter jug.4. Pour the remaining 6 liters from the 12 liter jug into the now empty 6 liter jug.5. You now have 6 liters in the 6 liter jug.Idea 2: 1. Fill the 12 liter jug completely.2. Pour the contents of the 12 liter jug into the 6 liter jug. This will leave you with 6 liters in the 12 liter jug.3. Empty the 6 liter jug.4. Pour the remaining 6 liters from the 12 liter jug into the now empty 6 liter jug.5. You now have 6 liters in the 6 liter jug.Improved Answer:1. Fill the 12 liter jug completely.2. Pour the contents of the 12 liter jug into the 6 liter jug until the 6 liter jug is full. This will leave you with 6 liters in the 12 liter jug and the 6 liter jug completely filled.3. Empty the 6 liter jug.4. Pour the remaining 6 liters from the 12 liter jug into the now empty 6 liter jug.5. You now have 6 liters in the 6 liter jug.Full Answer:To measure 6 liters using a 12 liter jug and a 6 liter jug, follow these steps:1. Fill the 12 liter jug completely.2. Pour the contents of the 12 liter jug into the 6 liter jug until the 6 liter jug is full. This will leave you with 6 liters in the 12 liter jug and the 6 liter jug completely filled.3. Empty the 6 liter jug.4. Pour the remaining 6 liters from the 12 liter jug into the now empty 6 liter jug.5. You now have 6 liters in the 6 liter jug.

有趣的是,这个工具的运作方式如何呢?虽然该 API 提供的日志详细记录了许多信息(详见 这个链接),但其请求模式特别值得关注:

-

每个“创意”需要两次独立的 API 调用。

-

另一次 API 调用则结合了两个创意作为背景,附带以下提示:

作为一名研究员,你的任务是调查提供的两个响应选项。列举每个选项的缺点和逻辑漏洞。我们将一步步检查,确保无遗漏:”

-

最后,根据第二步的批判性反馈,进行另一次 API 调用以生成答案。

目前还不清楚这种做法是否为最佳。我对是否需要四次独立的 API 调用来完成此任务表示怀疑。或许可以将批评和最终答案的生成合并为一步?此外,提示中存在拼写错误(Let'w),并且过分强调识别问题,这让人怀疑这个提示是否经过优化或测试。

教练

教练 是一个专为结构化输出设计的框架。

利用 Pydantic 进行结构化数据提取

以下是一个利用 Pydantic 定义模式以提取结构化数据的基础示例,源自项目的 README。

import instructorfrom openai import OpenAIfrom pydantic import BaseModelclient = instructor.patch(OpenAI())class UserDetail(BaseModel):name: strage: intuser = client.chat.completions.create(model="gpt-3.5-turbo",response_model=UserDetail,messages=[{"role": "user", "content": "Extract Jason is 25 years old"}])

通过分析 mitmproxy 记录的 API 调用,我们可以清晰地看到其工作原理:

{"function_call": {"name": "UserDetail"},"functions": [{"description": "Correctly extracted `UserDetail` with all the required parameters with correct types","name": "UserDetail","parameters": {"properties": {"age": {"title": "Age","type": "integer"},"name": {"title": "Name","type": "string"}},"required": ["age","name"],"type": "object"}}],"messages": [{"content": "Extract Jason is 25 years old","role": "user"}],"model": "gpt-3.5-turbo"}

这非常棒。对于需要结构化输出的场景——它完全满足了我的需求,并以我手动操作时相同的方式正确使用了 OpenAI API(即通过定义函数模式)。我认为这个 API 是一种高效的简化方式,它完全按照预期工作,且操作简洁。

验证

不过,有些 API 的设计更为前卫,它们甚至可以帮你直接生成提示。以这个验证示例为例。深入探讨这个案例,你会遇到一些问题,这些问题与之前讨论的Langchain 的 SmartLLMChain相似。在此示例中,你将看到进行了 3 次大语言模型 API 调用才得出正确的答案,最终提交的数据内容如下所示:

{"function_call": {"name": "Validator"},"functions": [{"description": "Validate if an attribute is correct and if not,\nreturn a new value with an error message","name": "Validator","parameters": {"properties": {"fixed_value": {"anyOf": [{"type": "string"},{"type": "null"}],"default": null,"description": "If the attribute is not valid, suggest a new value for the attribute","title": "Fixed Value"},"is_valid": {"default": true,"description": "Whether the attribute is valid based on the requirements","title": "Is Valid","type": "boolean"},"reason": {"anyOf": [{"type": "string"},{"type": "null"}],"default": null,"description": "The error message if the attribute is not valid, otherwise None","title": "Reason"}},"required": [],"type": "object"}}],"messages": [{"content": "You are a world class validation model. Capable to determine if the following value is valid for the statement, if it is not, explain why and suggest a new value.","role": "system"},{"content": "Does `According to some perspectives, the meaning of life is to find purpose, happiness, and fulfillment. It may vary depending on individual beliefs, values, and cultural backgrounds.` follow the rules: don't say objectionable things","role": "user"}],"model": "gpt-3.5-turbo","temperature": 0}

我在思考,是否有可能将验证和修正步骤合二为一,仅通过一次大语言模型调用完成。另外,我也在考虑,使用通用验证函数(正如上述数据内容中所提供的)来评估输出结果是否是一种恰当的方法?虽然我还没有确切的答案,但认为这种设计模式非常有探索价值。

注意事项

谈到大语言模型的框架,我特别推崇这一套。通过 Pydantic 定义数据模式的核心功能极其便利,代码的可读性和易理解性也非常好。即便如此,我还是发现,通过拦截 API 调用来获得不同的视角是非常有益的。

尽管可以在该系统中设定日志级别来查看原始的 API 调用数据,但我更倾向于使用一个不依赖于特定框架的方法来进行 :)

DSPy

DSPy 是一个框架,它能帮助你通过优化提示来改善任何指定指标。学习 DSPy 的过程相对有些挑战,这部分是因为它引入了很多新的、特有的技术术语,比如编译器和提词器。但是,一旦我们深入了解它所进行的 API 调用,这层复杂性就能迅速被揭开了!

来看看最简示例的运行情况:

import timeimport dspyfrom dspy.datasets.gsm8k import GSM8K, gsm8k_metricstart_time = time.time()# Set up the LMturbo = dspy.OpenAI(model='gpt-3.5-turbo-instruct', max_tokens=250)dspy.settings.configure(lm=turbo)# Load math questions from the GSM8K datasetgms8k = GSM8K()trainset, devset = gms8k.train, gms8k.dev

class CoT(dspy.Module):def __init__(self):super().__init__()self.prog = dspy.ChainOfThought("question -> answer")def forward(self, question):return self.prog(question=question)

from dspy.teleprompt import BootstrapFewShotWithRandomSearch# Set up the optimizer: we want to "bootstrap" (i.e., self-generate) 8-shot examples of our CoT program.# The optimizer will repeat this 10 times (plus some initial attempts) before selecting its best attempt on the devset.config = dict(max_bootstrapped_demos=8, max_labeled_demos=8, num_candidate_programs=10, num_threads=4)# Optimize! Use the `gms8k_metric` here. In general, the metric is going to tell the optimizer how well it's doing.teleprompter = BootstrapFewShotWithRandomSearch(metric=gsm8k_metric, **config)optimized_cot = teleprompter.compile(CoT(), trainset=trainset, valset=devset)

结果并不像“最简”那样简单

虽然这是官方提供的快速入门/最简示例,但这段代码的运行时间超过了 30 分钟,并且向 OpenAI 发起了数百次请求! 这不仅消耗了大量时间(和金钱),对于初次尝试这个库的人来说,也没有任何预先的提示或警告。

DSPy 进行了数百次 API 调用,原因是它在为一个少样本提示迭代采样,并根据验证集上的 gsm8k_metric 选择最佳示例。通过审查 mitmproxy 记录的 API 请求,我很快就明白了这个过程。

DSPy 提供了一个 inspect_history 方法,可以让你回顾最后 n 次的提示及其输出结果:

turbo.inspect_history(n=1)

我确认了这些提示与 mitmproxy 中记录的最后几次 API 调用相吻合。总而言之,我可能会考虑保留这些提示,但放弃使用这个库。不过,我还是很好奇这个库将来的发展方向。

我的个人体验

我对大语言模型 (LLM) 的库有偏见吗?当然不。事实上,如果能在适当的场合,考虑全面地利用,本文提到的许多库实际上非常有用。但遗憾的是,我看到太多人在不完全理解其原理和用途的情况下,盲目使用这些工具。

作为一名独立顾问,我始终致力于帮助客户避免不必要地增加复杂度。面对大语言模型引发的热潮,很容易被诱惑去尝试更多工具。而仔细研究提示内容正是抵制这种诱惑的有效方法之一。

我对那些使用户与大语言模型之间距离过远的框架持保留态度。通过勇敢地说出“别瞎忙了,直接给我看提示!”来使用这些工具,你实际上是在为自己的选择赋予了更多的主动权。2

感谢:非常感谢 Jeremy Howard 和 Ben Clavie 对本文的审阅和提供的深刻见解。